As product managers, we’re responsible not just for performing customer research and writing user stories, but also for the performance and the behavior of our product. Ensuring our product behaves correctly becomes harder yet more important as we gain more users.

Therefore, as part of writing a successful product spec, you should include information on how you expect the product to behave once you’ve implemented your new functionality.

One of the best ways to ensure that you understand the expected behavior of your product is to write up test cases. After all, if you understand how your product should behave under a multitude of scenarios, your product will be more robust.

So, how should we think through test cases as we create our product specs?

First, I’ll provide the structure of a single test case. Then, I’ll talk through a framework for how to generate test cases. Afterwards, I’ll provide an illustrated example for us to walk through together.

Let’s dive in!

Test Case Structure

What does a test case look like, and why does it matter?

A test case captures one user’s full set of interactions with your product. It should include the start of the flow, the path taken to get to the end, and the expected result of the flow.

So, a test case document for an online shopping product might look something like the following:

| # | Starting conditions | Path taken | Expected result | Actual result |
|---|---|---|---|---|
| 1 | User is logged in | | Shopping cart has 1 item | Shopping cart has 3 items – fail |
| 2 | User isn’t logged in | | New screen appears asking user to log in | New screen appears asking user to log in – pass |

From here, it’s pretty easy to see that there’s a problem with how the shopping cart adds items, but there’s no problem with how the shopping cart checks for a logged in user.
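If you want to keep test cases somewhere more structured than a document, here is a minimal sketch of the same idea in Python. The class name `SpecTestCase`, its field names, and the "Add one item to cart" step are my own assumptions for illustration. They are not part of any particular tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpecTestCase:
    """One user's full flow: where they start, what they do, what should happen."""
    number: int
    starting_conditions: str
    path_taken: List[str]      # ordered user actions (hypothetical steps)
    expected_result: str
    actual_result: str = ""    # filled in once the test has been run

    def passed(self) -> bool:
        # A case passes only when the observed result matches the expectation.
        return self.actual_result == self.expected_result


cases = [
    SpecTestCase(1, "User is logged in", ["Add one item to cart"],
                 "Shopping cart has 1 item", "Shopping cart has 3 items"),
    SpecTestCase(2, "User isn't logged in", ["Add one item to cart"],
                 "New screen appears asking user to log in",
                 "New screen appears asking user to log in"),
]

for case in cases:
    print(f"#{case.number}: {'pass' if case.passed() else 'fail'}")
# prints "#1: fail" then "#2: pass"
```

Keeping the expected and actual results as separate fields is what makes the pass/fail call mechanical rather than a judgment made at test time.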

A well-written test case ensures that your development team knows what success looks like!

So, now that we know what a single good test case looks like, let’s think through how we might capture the relevant set of test cases we need for our new functionality.

A Framework for Test Cases

Writing just one test case isn’t enough. We need a set of test cases that covers all of the valid scenarios that a user might experience.

If we write too few test cases, we might miss edge cases that have undesirable outcomes. But, if we write too many test cases, we’re spending time testing for scenarios that would never happen, which is an inefficient use of scarce resources.

So how do we know what test cases we actually need to capture?

We can start by considering the existing behavior of our product. What does our product currently do? We should check to see whether our product’s existing behavior has documented test cases – if not, now is a good time to build that foundation and to create test cases for our existing product scenarios.

From there, consider the new behavior that you’ll be introducing. What new user flows have you introduced? Which existing flows have you modified, and how do your changes impact those flows? Are there any existing flows that you’ve removed due to your changes?

Again, each test case should represent one user’s full set of interactions with your product. In other words, you shouldn’t just test a single button – you should test the end-to-end flow that incorporates the button.

Any time we build functionality, we need to consider what dependencies we’ve introduced. For every dependency, we need to test the combination of our new functionality with the existing dependent functionality.

In cases where we’ve built functionality independent of other functions, we only need to test each option rather than each combination of options.

For example, if you build a feedback flow, you probably don’t need to test the checkout flow, because feedback and checkout are independent.

On the other hand, if your feedback flow only appears once you’ve successfully completed the checkout flow, you’ll need to test the entire set of flow combinations.
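To see why dependencies matter so much for the size of your test plan, here is a rough sketch in Python. The option lists are hypothetical; the point is only that independent flows add up, while dependent flows multiply.

```python
from itertools import product

# Hypothetical options for two flows; the names are illustrative only.
feedback_options = ["yes", "no", "skip"]
checkout_options = ["credit card", "gift card", "store credit"]

# Independent flows: test each option of each flow once.
independent_cases = (
    [("feedback", option) for option in feedback_options]
    + [("checkout", option) for option in checkout_options]
)

# Dependent flows (feedback only appears after checkout succeeds):
# test every checkout option combined with every feedback option.
dependent_cases = list(product(checkout_options, feedback_options))

print(len(independent_cases))  # 3 + 3 = 6
print(len(dependent_cases))    # 3 * 3 = 9
```

With only three options per flow the gap is small, but it widens quickly as each flow gains options or as more flows depend on one another.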

Illustrated Example

I’ve created some mockups for us to work with, so that it’s a bit easier to visualize how to tackle test cases.

I’ll walk through the existing behavior of my hypothetical product, the proposed new behavior of my hypothetical product, and a breakdown of the test cases that I should cover.

Existing Behavior

Let’s say that I’m currently working on an existing feedback feature.

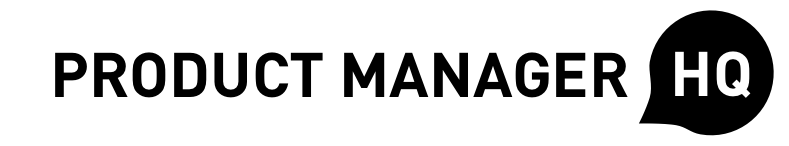

My feature only has two screens. The first screen looks like the below:

Notice that the user can select either “yes” or “no”, and they can also skip this section to jump back into the main product.

If the user selects “no”, then we’ll bring them back into the product.

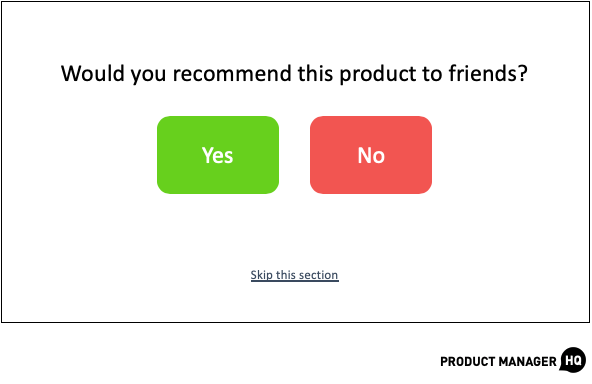

But, if the user selects “yes”, then my feature surfaces this second screen:

And that’s it! Pretty simple feature.

Proposed New Behavior

Let’s say that based on user research, we’ve found the qualitative feedback below.

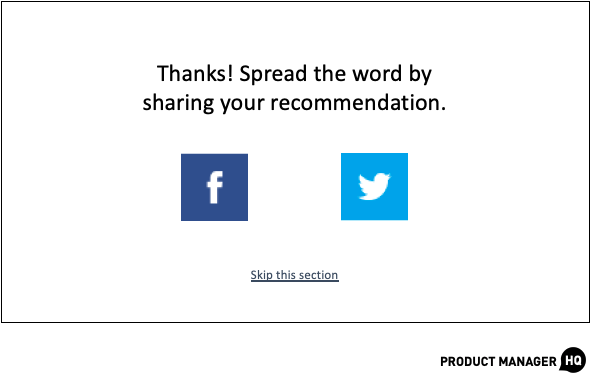

When users say that they’d like to recommend this product to friends, they’re frustrated that there’s not a quick way to share their recommendation.

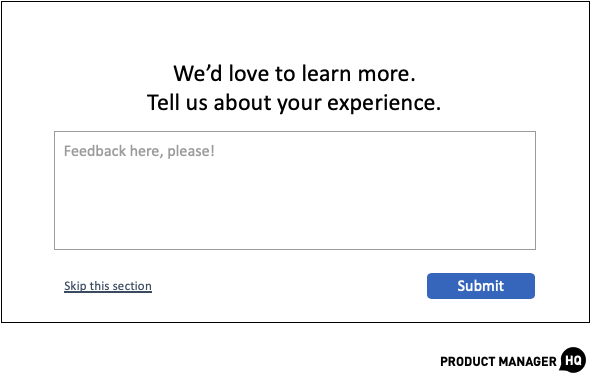

When users say that they didn’t enjoy this product, or when they say they wouldn’t recommend this product to friends, they’re frustrated that there’s no way for them to provide feedback to us directly.

So, my team and I build the following new functionality.

If a user says that they’d recommend my product to friends, we’ll now surface a third screen that looks like the following:

And, if a user says that they didn’t enjoy the product, or they wouldn’t recommend the product, we’ll now surface a fourth screen that looks like the following:

How hard could it be to test this new functionality?

You’d be surprised. We’ve just introduced lots of hidden complexity. Let’s break down that complexity case-by-case.

Test Cases to Cover

Let’s jump back in time and consider our initial feature. At that point in time, I needed to test the following flows:

- Yes on first screen, yes on second screen, exit back to product

- Yes on first screen, no on second screen, exit back to product

- Yes on first screen, skip on second screen, exit back to product

- No on first screen, exit back to product

- Skip on first screen, exit back to product

Once I added the social sharing screen, my test cases now look like this:

- Yes on first screen, yes on second screen, Twitter share, exit back to product

- Yes on first screen, yes on second screen, Facebook share, exit back to product

- Yes on first screen, yes on second screen, skip sharing, exit back to product

- Yes on first screen, no on second screen, exit back to product

- Yes on first screen, skip on second screen, exit back to product

- No on first screen, exit back to product

- Skip on first screen, exit back to product

Note that I’ve now introduced 2 new test paths, and modified 1 of my existing test paths.

And once I added the feedback on top of that, my test cases now look like this:

- Yes on first screen, yes on second screen, Twitter share, exit back to product

- Yes on first screen, yes on second screen, Facebook share, exit back to product

- Yes on first screen, yes on second screen, skip sharing, exit back to product

- Yes on first screen, no on second screen, provide feedback, exit back to product

- Yes on first screen, no on second screen, skip feedback, exit back to product

- Yes on first screen, skip on second screen, exit back to product

- No on first screen, provide feedback, exit back to product

- No on first screen, skip feedback, exit back to product

- Skip on first screen, exit back to product

Note that I’ve now introduced 2 more test paths, and modified 2 of my existing test paths.
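If listing paths by hand feels error-prone, one option is to describe each version of the feature as a small map of screens and choices, then let a script walk every route to the exit. This is only a sketch: the screen names (`first`, `second`, `share`, `feedback`) and the dictionary layout are my own assumptions, not how any particular product stores its flows.

```python
def enumerate_paths(flow, screen="first", taken=()):
    """Return every sequence of (screen, choice) steps that ends at the exit."""
    if screen == "exit":
        return [taken]
    paths = []
    for choice, next_screen in flow[screen].items():
        paths += enumerate_paths(flow, next_screen, taken + ((screen, choice),))
    return paths


SHARE = {"Twitter share": "exit", "Facebook share": "exit", "skip sharing": "exit"}
FEEDBACK = {"provide feedback": "exit", "skip feedback": "exit"}

original = {
    "first": {"yes": "second", "no": "exit", "skip": "exit"},
    "second": {"yes": "exit", "no": "exit", "skip": "exit"},
}
with_sharing = {
    "first": {"yes": "second", "no": "exit", "skip": "exit"},
    "second": {"yes": "share", "no": "exit", "skip": "exit"},
    "share": SHARE,
}
with_feedback = {
    "first": {"yes": "second", "no": "feedback", "skip": "exit"},
    "second": {"yes": "share", "no": "feedback", "skip": "exit"},
    "share": SHARE,
    "feedback": FEEDBACK,
}

for name, flow in [("original", original),
                   ("with sharing", with_sharing),
                   ("with feedback", with_feedback)]:
    print(name, len(enumerate_paths(flow)))
# prints: original 5, with sharing 7, with feedback 9
```

The counts match the three lists above, and each returned path reads as one end-to-end test case.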

We haven’t even talked about the testing of the feedback box itself!

For example, what if someone inputs non-English characters? What if someone leaves it blank and tries to submit? What if someone tries to maliciously inject code through the feedback box?
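To make those three questions concrete, here is a small sketch of what input checks for the feedback box might look like. The function `clean_feedback` and the `MAX_LENGTH` limit are hypothetical. Escaping with Python’s standard `html.escape` is just one mitigation; real protection depends on how your team stores and displays the feedback.

```python
import html

MAX_LENGTH = 1000  # hypothetical limit, not taken from any real spec


def clean_feedback(text):
    """Return display-safe feedback, or None if the submission should be rejected."""
    stripped = text.strip()
    if not stripped or len(stripped) > MAX_LENGTH:
        return None
    # Escape HTML special characters so submitted markup shows as text, not code.
    return html.escape(stripped)


# Blank submission is rejected.
assert clean_feedback("   ") is None
# Non-English characters are kept as-is.
assert clean_feedback("とても良い") == "とても良い"
# Markup is neutralized rather than passed through.
assert clean_feedback("<script>alert(1)</script>") == "&lt;script&gt;alert(1)&lt;/script&gt;"
```

Each assertion maps to one of the questions above, which is a handy way to turn an open-ended worry into a test case with a clear expected result.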

Also, when we test, we shouldn’t just test the behavior of the product. We should also consider whether we’re storing data correctly.

Are we capturing feedback correctly? Did we correctly track that someone shared, and through what channel? If someone skipped, did we capture which screen they skipped at?
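Data checks can be written as test cases too. The sketch below assumes a hypothetical `track` function and an in-memory `events` list standing in for whatever analytics store your team actually uses; the event and property names are made up for illustration.

```python
events = []  # stand-in for an analytics store (hypothetical)


def track(event, **properties):
    """Record an event together with its properties."""
    events.append({"event": event, **properties})


# One user: yes on first screen, yes on second screen, shares via Twitter.
track("answered", screen="first", answer="yes")
track("answered", screen="second", answer="yes")
track("shared", channel="twitter")

# Another user: yes on first screen, then skips on the second screen.
track("answered", screen="first", answer="yes")
track("skipped", screen="second")

shares = [e for e in events if e["event"] == "shared"]
skips = [e for e in events if e["event"] == "skipped"]

# Did we record the share and its channel, and the screen that was skipped?
assert shares == [{"event": "shared", "channel": "twitter"}]
assert skips == [{"event": "skipped", "screen": "second"}]
```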

One final thought here: too often, I see people not testing the skip functionality itself once the new screens have been introduced. Even though skip behavior wasn’t touched as part of the requirements, it’s possible that the new flow will impact the way the skip works.

Test Case Automation

Our simple illustration above demonstrates that even “trivial” changes can lead to dramatically increased testing requirements. So how do product teams stay sane, given that each new screen can multiply the number of paths to test?

One way is to work alongside your engineering team to implement automated tests. Essentially, as engineers write new functionality, they’ll also use standardized tests to ensure that nothing breaks without their knowledge.

The magic of automated tests is that your team can run them as frequently as they’d like. I’ve generally observed that teams run the automated test suite before merging the code into the broader codebase, and right before releasing into a production environment.
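For a sense of what your engineers might write, here is a minimal sketch using Python’s built-in `unittest` module. The `NEXT_SCREEN` table and the `next_screen` function are hypothetical stand-ins for the real routing logic of the feedback feature.

```python
import unittest

# Hypothetical routing table: (screen, choice) -> next screen.
NEXT_SCREEN = {
    ("first", "yes"): "second",
    ("first", "no"): "feedback",
    ("first", "skip"): "exit",
    ("second", "yes"): "share",
    ("second", "no"): "feedback",
    ("second", "skip"): "exit",
}


def next_screen(screen, choice):
    """Look up where a choice on a given screen should send the user."""
    return NEXT_SCREEN[(screen, choice)]


class FeedbackFlowTests(unittest.TestCase):
    def test_skip_still_exits(self):
        # Regression check: skip should keep working after the new screens ship.
        for screen in ("first", "second"):
            self.assertEqual(next_screen(screen, "skip"), "exit")

    def test_no_leads_to_feedback(self):
        # New behavior: a "no" on either screen surfaces the feedback screen.
        for screen in ("first", "second"):
            self.assertEqual(next_screen(screen, "no"), "feedback")
```

Saved in a file, tests like these can be run with `python -m unittest`, which is the kind of command a team can wire into its merge and release steps.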

Summary

Great product managers understand not just the user pain that they’re solving, but also the increased complexity that they’re introducing to the system. By understanding the downstream impact, great product managers increase the resilience of their products.

When you build out new functionality, work with your team to document the new user paths that you’re introducing. From there, ensure that each path gets tested, whether manually or automatically. On top of that, remember to test the previously existing paths as well – too often, we don’t check to ensure that we haven’t introduced a regression.

By being mindful of complexity and by writing test cases, we ensure that users will have great experiences with our products!

Have thoughts that you’d like to contribute around test cases? Chat with other product managers around the world in our PMHQ Community!

Clement Kao has published 60+ product management best practice articles at Product Manager HQ (PMHQ). Furthermore, he provides product management advice within the PMHQ Slack community, which serves 8,000+ members. Clement also curates the weekly PMHQ newsletter, serving 27,000+ subscribers.